Follow the Power: Betting on AI's Infrastructure Backbone

An Investment Thesis Behind AI's $1 Billion Training Runs

“The thing that will gate us the most is power. It’s the biggest strategic constraint… We might run out of money before we run out of silicon,” warns Martin Lund, EVP of Hardware at Cisco.

Training AI is becoming astronomically expensive. The training runs alone for models like GPT-4 and Gemini Ultra cost an estimated $40M and $30M, respectively. Anthropic CEO Dario Amodei has stated that AI developers are likely to spend close to a billion dollars on a single training run this year, and up to ten billion dollars within the next two years.

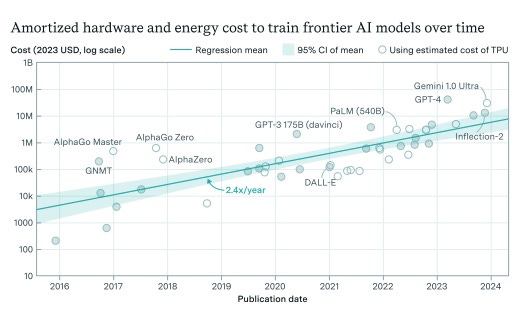

According to new research by Cottier, Rahman, et al. (2024), the cost of training frontier AI models has grown 2.4x annually since 2016.
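To make the 2.4x figure concrete, here is a minimal Python sketch of the compounding arithmetic. It assumes the same growth factor every year, which real costs will not follow exactly, and it treats 2024 as the endpoint; the function names are illustrative, not taken from the paper.

```python
import math

# Annual growth factor for frontier training costs, as cited above.
ANNUAL_GROWTH = 2.4


def cumulative_growth(factor: float, years: int) -> float:
    """Total multiplier after compounding `factor` once per year for `years` years."""
    return factor ** years


def doubling_time_months(factor: float) -> float:
    """Months for costs to double, assuming the same growth factor every year."""
    return 12 * math.log(2) / math.log(factor)


if __name__ == "__main__":
    years = 2024 - 2016  # assumed window: 2016 through 2024
    print(f"Growth over {years} years: {cumulative_growth(ANNUAL_GROWTH, years):,.0f}x")
    print(f"Doubling time: {doubling_time_months(ANNUAL_GROWTH):.1f} months")
```

Run as written, it reports roughly 1,101x growth over eight years and a doubling time of about 9.5 months. In other words, at this pace training costs more than double every year.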

These costs break down into:

Hardware (Chips & Computers): 47-65%

Brain Power (R&D staff): 29-49%

Energy: Currently 2-6%, but this seemingly small number hides a bigger problem

Why This Matters

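Training Gemini Ultra already required an estimated 35 megawatts, enough to power 25,000 homes. By 2029, a single frontier training run could require up to 1 GW. To put this in context, the ten largest power plants in the United States each have a capacity of between 3 GW and 7 GW, so one such run could claim between a seventh and a third of the output of one of the country's biggest plants.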
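These comparisons can be checked with a few lines of arithmetic. This minimal Python sketch uses only the figures in this section; it assumes the 35 MW is a steady draw and derives the per-home rate from the 25,000-homes comparison rather than from any utility data. All names are illustrative.

```python
# Inputs taken from the text above; the per-home rate is derived, not measured.
GEMINI_ULTRA_MW = 35.0
HOMES_POWERED = 25_000
FUTURE_RUN_MW = 1_000.0                # 1 GW projected for 2029
LARGE_PLANT_MW = (3_000.0, 7_000.0)    # capacity range of the ten largest US plants


def kw_per_home(total_mw: float, homes: int) -> float:
    """Average draw per home implied by powering `homes` with `total_mw`."""
    return total_mw * 1_000 / homes


def homes_equivalent(total_mw: float, per_home_kw: float) -> float:
    """How many homes `total_mw` could supply at `per_home_kw` each."""
    return total_mw * 1_000 / per_home_kw


def plant_share(run_mw: float, plant_mw: float) -> float:
    """Fraction of one plant's capacity that a single training run would use."""
    return run_mw / plant_mw


per_home = kw_per_home(GEMINI_ULTRA_MW, HOMES_POWERED)
scale_up = FUTURE_RUN_MW / GEMINI_ULTRA_MW
homes_1gw = homes_equivalent(FUTURE_RUN_MW, per_home)
shares = [plant_share(FUTURE_RUN_MW, mw) for mw in LARGE_PLANT_MW]

print(f"Implied draw per home: {per_home:.1f} kW")
print(f"1 GW vs. Gemini Ultra: {scale_up:.1f}x")
print(f"Homes a 1 GW run could otherwise power: {homes_1gw:,.0f}")
print(f"Share of a 3 GW / 7 GW plant: {shares[0]:.0%} / {shares[1]:.0%}")
```

It prints an implied draw of 1.4 kW per home, a roughly 28.6x jump from 35 MW to 1 GW, about 714,286 homes' worth of power for a 1 GW run, and a share of 33% of a 3 GW plant or 14% of a 7 GW plant.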

The Investment Thesis

Just as railroads and utilities powered the Industrial Revolution (see “The Upside of Tech Bubbles: Building Tomorrow's Infrastructure”), AI needs its own infrastructure backbone: data centers and a massive power supply. Instead of betting on which AI company wins, invest in what they all need to operate.

Investment Strategy: A "Picks and Shovels" Play

Four Foundation Builders*:

NextEra Energy (NEE): Top renewable energy provider, positioned for the significant growth in electricity demand expected from AI

Brookfield Renewable (BEP): Global clean energy partner, directly supporting AI infrastructure power needs

Digital Realty (DLR): Leading AI-ready data center provider

Vertiv (VRT): Specialized cooling solutions for high-density data centers, essential for removing the heat that AI computing generates

Just like the railways before them, these companies are building tomorrow's essential infrastructure. They may not make headlines like OpenAI or Anthropic, but they're providing the foundation these AI giants need to operate.

*Key risks to monitor: potential regulatory challenges, competition from new infrastructure providers, and breakthroughs in AI efficiency that could change how much infrastructure AI ultimately needs.

Note: This analysis is based on current research and market conditions. Always do your own research before making investment decisions.

Those are my Thoughts From the DataFront

Max

More charts from the research report (if you are nerdy like me)

Source: https://arxiv.org/pdf/2405.21015v1

Notable Substackers To Check Out:

Money Machine Newsletter: Market-beating insights in 5 minutes

The Technomist: Where business, economics, and technology meet

New Economies: Tech trends and startup ecosystem insights

Maverick Equity Research: Data-driven investment analysis

AI Supremacy: Decoding AI's impact on business and society

AI Disruption: Daily insights from an AI engineering veteran

VC Corner: Weekly startup ecosystem analysis

Update (Jan 28, 2025):

Some argue that DeepSeek's energy is cheaper because China burns cheap coal. However, China's energy landscape has transformed dramatically over the past decade: while some think China relies mainly on cheap coal, the reality shows a strong shift toward clean energy. By the end of 2023, wind and solar power capacity had grown tenfold, and clean energy accounted for over 58% of China's installed power capacity. Coal's share of energy consumption has dropped significantly, while clean energy's share has risen from 15.5% to 26.4% of total energy consumption. The country has also become more energy efficient, using 26% less energy per unit of GDP than in 2012, while building expertise in clean energy technologies including wind, solar, nuclear, and hydropower.

Update (Jan 27, 2025):

DeepSeek's breakthrough in AI training efficiency raises important questions about our infrastructure thesis. While more efficient models might reduce individual training costs, their impact on total infrastructure demand remains unclear. Historically, efficiency gains have often increased total usage, a pattern known as the Jevons paradox (think cars and gas stations), but AI's rapid evolution means we need to watch carefully how these efficient models perform at scale before drawing firm conclusions about future power demand.

Please share your opinions.