Darwin vs. DeepMind: Why AGI Is Further Than You Think

The real difference between finding patterns and creating breakthrough ideas

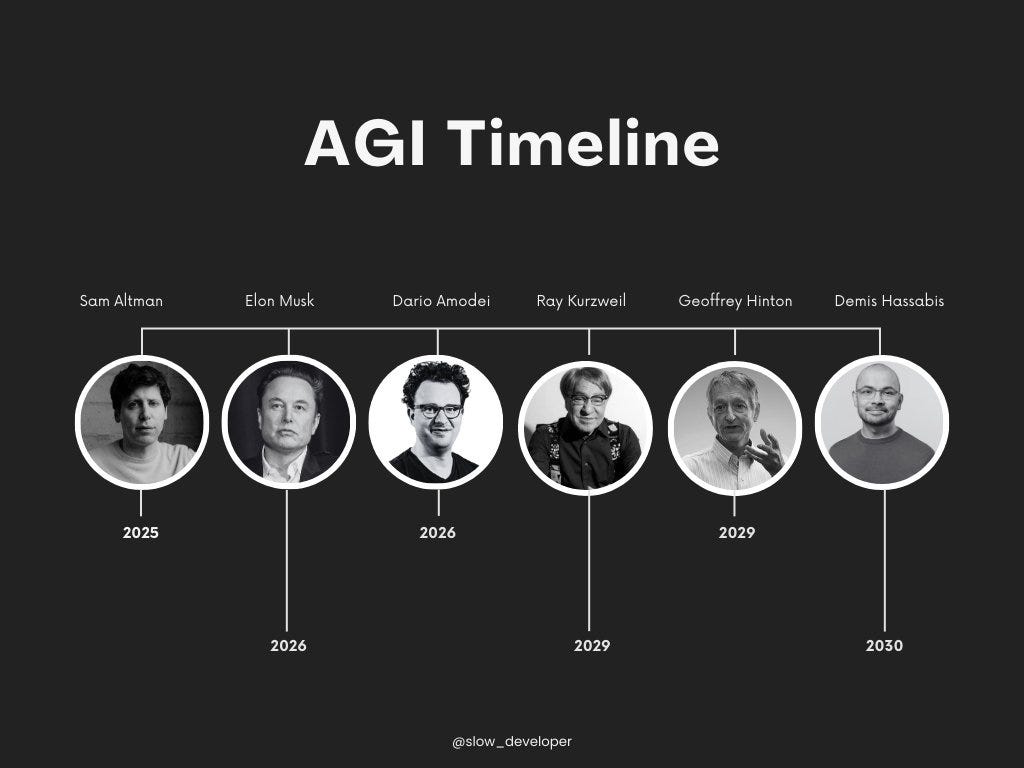

Tech leaders are making bold predictions about Artificial General Intelligence (AGI). Sam Altman (OpenAI) thinks we'll have AGI by 2025. Dario Amodei (Anthropic) says 2026. Demis Hassabis (Google DeepMind) is betting on 2027. But before we either panic or celebrate, let's understand what AGI really means and why these predictions might be missing something fundamental about intelligence.

Understanding AI

Today's AI: A computer system that learns from huge amounts of data. After studying millions of examples, it spots patterns and uses them to do tasks. That's why ChatGPT can write essays, Midjourney can create art, and Tesla cars can drive themselves - they've studied so many examples that they know what usually comes next.

AGI: A computer system that would think and learn just like humans do. It wouldn't just follow patterns it's seen before - it would understand problems deeply and come up with completely new solutions. Unlike today's AI that needs to be trained on specific tasks, AGI would learn anything on its own and use that knowledge to solve any problem, even ones it's never seen before. We can describe what we want AGI to do, but nobody knows how to build it yet.

The key difference:

Today's AI = finds patterns in what already exists

AGI = would create new ideas and solve problems in totally new ways

Are We Really Getting Closer to AGI?

While AI has gotten amazingly good at finding patterns in existing data, it's still missing something uniquely human - the ability to imagine entirely new patterns.

Let’s look at two contrasting examples of intelligence:

AI Thinking (Pattern Finding): In 2016, AlphaGo played a now-famous move (Move 37 of the second game) against Lee Sedol that shocked everyone. While the move seemed like a mistake initially, it was actually a winning strategy that humans had overlooked. Was this creative? Not really - AlphaGo had simply discovered hidden patterns across millions of games, both human games and games it played against itself.

Human Thinking (Pattern Creation): Consider Darwin's theory of evolution. Geologists of his day, Charles Lyell above all, had argued that slow forces such as water carving rock reshape landscapes over vast stretches of time. Darwin made a revolutionary mental leap: "What if living things change the same way - gradually over time?" This wasn't about finding patterns in data - it was about imagining something entirely new.

“The highest type of intelligence, says Aristotle, manifests itself in an ability to see connections where no one has seen them before, that is, to think analogically.”

— J. M. Coetzee

Why AGI is So Hard

To build AGI, we need computers that can:

Ask new questions, not just answer existing ones

Handle opposing ideas at the same time

Work well with missing information

Experience things subjectively to fuel creativity

What This Means for Your Business

While AGI might not arrive next year, today's AI can still be transformative. Here's how to approach it:

Use AI for pattern detection (customer behaviour, market trends, operational data)

Keep humans in charge of imagination (new products, strategies, innovations)

Build systems that combine AI's pattern-finding with human creativity

Instead of asking "When will AGI arrive?", ask yourself: "How can I use today's AI to make my team stronger?"

Those are my Thoughts From the DataFront

Max

You lost me with the AlphaGo example. I wasn't aware of the specifics, and while that AI wasn't capable of anything other than playing Go, it demonstrates the opposite of what you wanted to imply: the system did NOT just discover and "parrot" human play; it found novel moves that humans had never come up with in millions of games over centuries. So while LLMs are trained with next-token prediction, they certainly already have the ability to connect dots, and the line between synthetic intelligence, simulated intelligence, biological intelligence and biological banality is far more blurred than you hope. Disclaimer: that's just a general statement, not in any way a prediction about AGI; that would be a different discussion.

Updated viewpoint based on the latest news (December 2024):

https://www.thoughtsfromthedatafront.com/p/beyond-pattern-matching-openais-o3